Representation of Undirected Graphical Model

Published:

This page’s Markdown is generated via pandoc from LaTex. If you feel more comfortable with a LaTex layout, please check here. The original Tex file is also available here.

1. Review

There are several important concepts and theorems introduced in last lecture about Directed Graphical Models.

Local independence: Indicate that in a directed graph, each variable is independent to its nondescendants given its parent.

Global independence: The global independence is given by d-seperation. Note that there is no need to consider too much about global and local things, you can call them whatever you want.

A fully connected DAG is an I-map of any distribution, since for any .

Minimal I-map: A DAG is a minimal I-map of , if the removal of even a single edge from renders it not an I-map.

A distribution may have several I-maps.

P-map: A DAG is a perfect map (p-map) of a distribution if

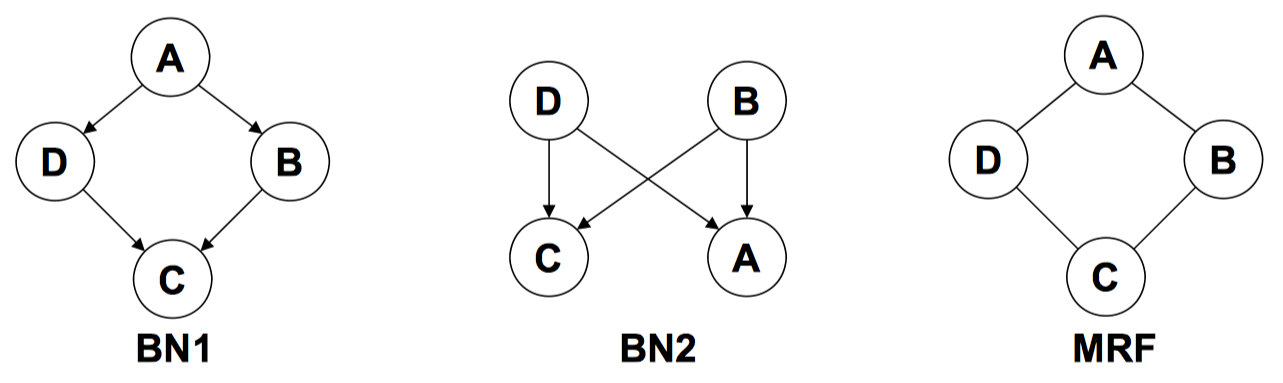

Note that not every distribution has a perfect map as DAG. Here is an example:

BN1 wrongly says , BN2 wrongly says

It is impossible for a DAG to capture both of the two independences at same time. The main reason is that the directed model (sometimes) encodes more independences together with the one we want. Thus, there is a portion of the space of distribution that we cannot encode with a DGM. That motivates another type of graphical model: undirected graphical models, aka Markov Random Fields.

2. Undirected Graphical Models

UGMs are very similar to DGMs in structure; but the directed or undirected edges encode differently. The directed model encodes causal relationship between nodes, while UGMs captures pairwise relationship which represents correlation between nodes, rough affinity.

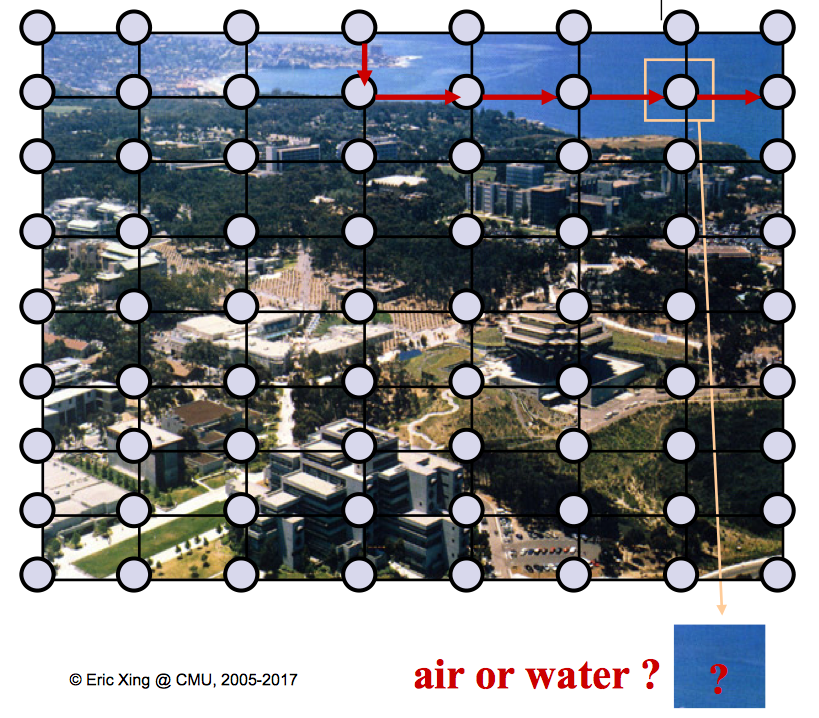

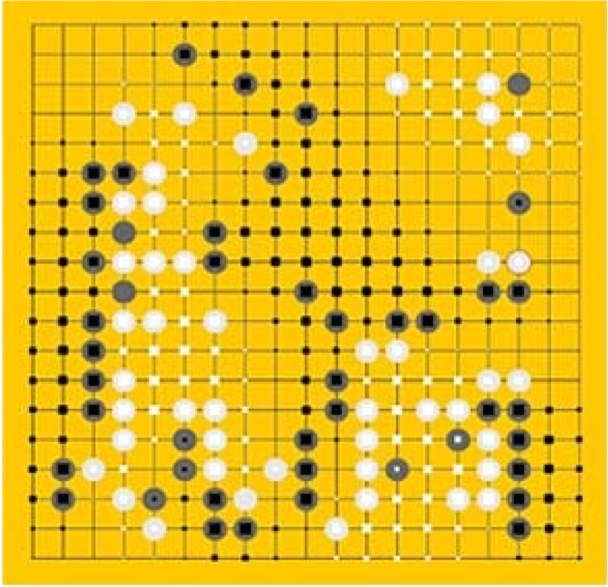

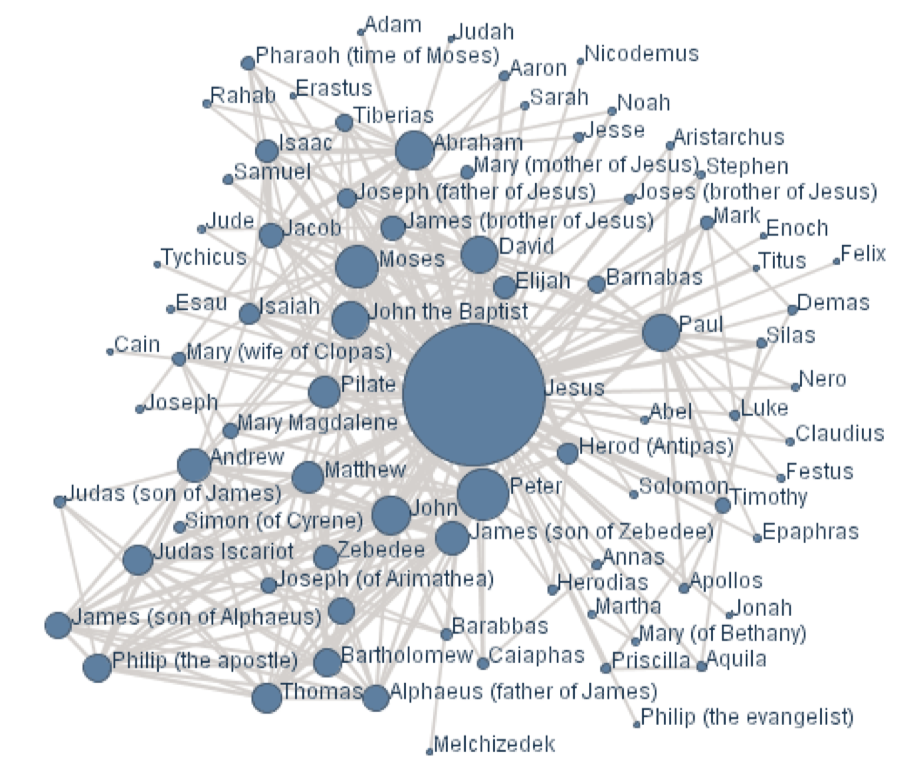

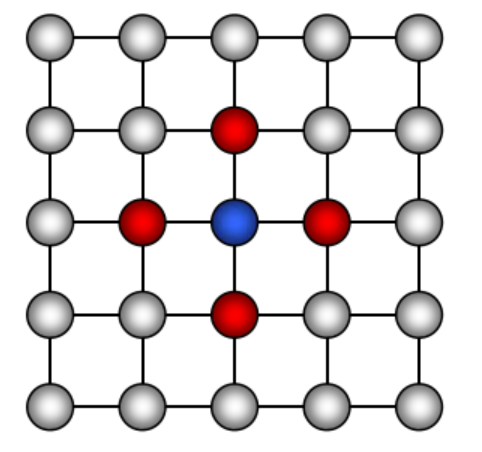

Many things can be modeled as a UGM, such as a photo—each pixel can be a node, a go game—the grid chessboard seems intuitive, or even social networks, as shown in figure 2.

3. Representation

Definition an undirected graphical model represents a distribution defined by an undirected graph , and a set of positive potential functions associated with the cliques of , s.t.

where is known as a partition function:

The potential function can be understood as an contingency function of its arguments assigning “pre-probabilistic” score of their joint configuration. We call this of distribution in equation above as Gibbs distribution, as Definition 4.3 in Koller textbook. And the potential function is defined as factor in Koller textbook.

Definition For , a complete subgraph (clique) is a subgraph such that nodes in are fully interconnected.A (maximal) clique is a complete subgraph s.t. any superset is not complete.

Interpretation of Clique Potentials

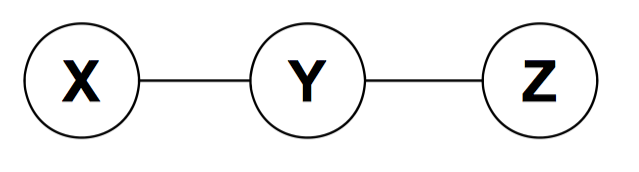

The model implies . This independence statement implies (by definition) that the joint must factorize as:

We can write this as

or

However, we cannot have all potentials be marginals and cannot have all potentials be conditionals.

The positive clique potentials can only be thought of as general “compatibility”, “goodness” or “happiness” functions over their variables, but not as probability distributions.

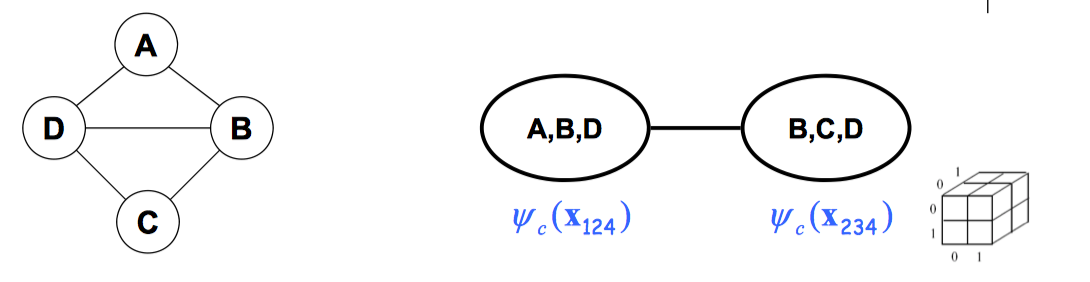

Example UGM — using max cliques

Here we’ll use an example to show an UGM.

We can factorize the graph into two max cliques:

We can represent as two 3D tables instead of one 4D table.

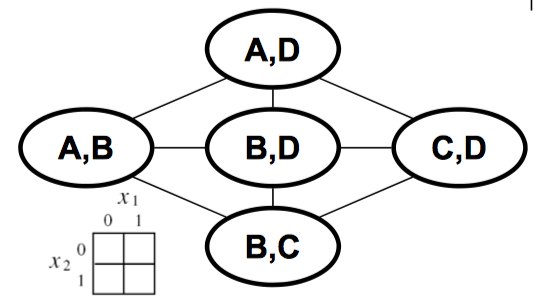

Using subcliques

In this example, the distribution factorized over the subcliques.

Example UGM — canonical representation

A canonical representation of such a graph can be expressed as:

4. Independence properties

Global independence

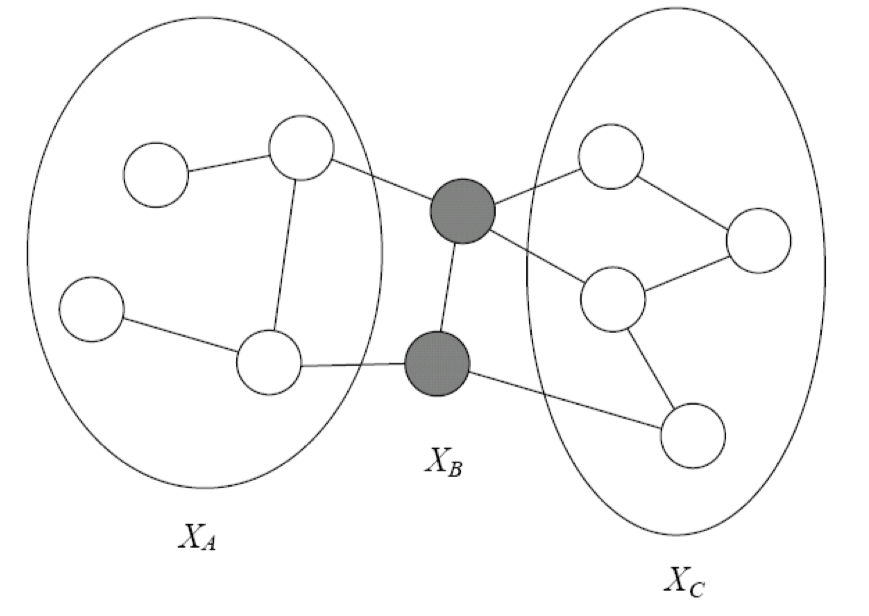

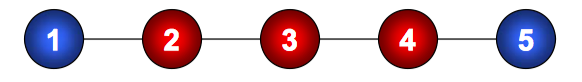

Definition A set of nodes separates and in , denoted , if there is no active path between any node and given . Global independences associated with are defined as:

In Figure 3, B separates A and C if every path from a node in A to a node in C passes through a node in B. It is written as sepH(A : C|B). A probability distribution satisfies the global Markov property if for any disjoint A,B,C such that B separates A and C, A is independent of C given B.

Local independence

Definition For each node , there is unique Markov blanket of , denoted , which is the set of neighbors of in the graph (those that share an edge with )

Definition The local Markov independencies associated with H is:

In other words, X i is independent of the rest of the nodes in the graph given its immediate neighbors.

Note that, based on the local independence:

Soundness and completeness of global Markov property

Definition An UG is an I-map for a distribution if , i.e., entails .

Definition P is a Gibbs distribution over H if it can be represented as

Theorem (soundness): If is a Gibbs distribution over , then is an I-map of .

Theorem (Completeness): If and are not separated given in (), then and are dependent given , in some distribution represented as () that factorizes over .

The proof of the theorems are available on Koller textbook.

Other Markov properties

For directed graphs, we defined I-maps in terms of local Markov properties, and derived global independence.For undirected graphs, we defined I-maps in terms of global Markov properties, and will now derive local independence.

The pairwise Markov independencies associated with UG are

For example, in figure 5, we have the following independence

Relationship between local and global Markov properties

For any Markov Network H, and any distribution P, we have that if then

For any Markov Network H, and any distribution P, we have that if then

Let P be a positive distribution. If , then

The following three statements are equivalent for a positive distribution P:

Above equivalence relies on the positivity assumption of . For nonpositive distributions, there are examples of distributions , there are examples which satisfies one of these properties, but not the stronger property.

Perfect maps

Definition A Markov network is a perfect map for if for any ; ; we have that

Note that, just like DMs, not every distribution has a perfect map as UGM.

Exponential Form

Constraining clique potentials to be positive could be inconvenient (e.g., the interactions between a pair of atoms can be either attractive or repulsive). We represent a clique potential in an unconstrained form using a real-value “energy” function :

Thus, this gives the joint distribution an additive structure:

where the is called the “free energy”.

The exponential ensures that the distribution is positive. In physics, this is called the “Boltzmann distribution”.In statistics, this is called a log-linear model (as Koller textbook introduces).

Leave a Comment